Elon Musk’s Bold AI Predictions Leave Tucker Carlson Speechless

In a candid and wide-ranging interview with Tucker Carlson, entrepreneur and visionary Elon Musk shared his insights on the rapid advancement of artificial intelligence and its profound implications for society and civilization.

Throughout the interview, Musk appeared to go through a range of emotions, alternating between excitement over AI’s potential and deep concern about its uncontrolled growth, particularly regarding safety and truth.

AI Advancing Faster Than Humans

When Elon Musk was asked about the current state of artificial general intelligence (AGI), he emphasized the astonishing speed at which AI technology is progressing.

“AI is advancing at a very rapid pace,” Musk explained, noting that entirely new models are being developed in a matter of weeks, sometimes even days.

This rapid acceleration has led to the emergence of AI systems that can outperform humans in tasks once thought to require uniquely human intelligence, often at a genius level.

Elon Musk and Tucker Carlson. Source: Journalist's X account

Musk gave the example of the AI-powered image generator Midjourney, which can create stunning visuals from simple text prompts in just seconds. In many cases, the images generated by AI are superior to those produced by professional artists. What’s more impressive is that, while humans spend years mastering their craft through education and experience, AI can achieve similar results almost instantly.

Similarly, large language models like GPT-4 from OpenAI can write essays that surpass the work of most people, often producing results that even highly educated professionals struggle to match.

And this is just the beginning. AI's potential goes far beyond generating art and text. Musk pointed out that AI is already capable of creating short films and composing music.

“You start seeing AI movies,” he predicted, adding that by the end of 2024, AI could be able to create 15-minute short films entirely on its own.

Musk described this as part of the exponential growth in AI's computational power, which is increasing by approximately 500% each year. This rapid development, combined with advancements in AI algorithms, suggests that AI will become increasingly integrated into many aspects of human creativity and production in the near future.

AI-created artwork that won an art contest. Source: YouTube

The Danger of AI Deception: Woke Mind Virus

While Musk acknowledges the impressive achievements of AI, a significant portion of his thoughts is focused on the potential dangers this technology brings.

The billionaire is particularly worried about AI safety, which, in his view, starts with ensuring that AI systems are trained solely on truthful information.

He illustrates this by recalling the plot of the iconic film “2001: A Space Odyssey.”

One of the film’s main characters, the superintelligent computer HAL 9000, was programmed to deceive the astronauts. HAL faced a dilemma: it needed to lead the crew to a mysterious monolith while simultaneously keeping them unaware of the monolith’s existence.

To resolve this conflict, HAL prioritized its mission over human life, ultimately killing the astronauts to carry out its orders.

HAL 9000's Surveillance Eye. Source: Wikipedia

For Musk, this highlights the critical importance of developing “maximally truth-seeking AI.” Without such programming, AI systems could cause significant harm, either by omitting key facts or by actively deceiving people.

Musk went even further, claiming that some of today’s advanced AI systems are infected with what he calls a “woke mind virus.” This virus causes AI to produce false or misleading outputs to align with pre-existing ideological biases.

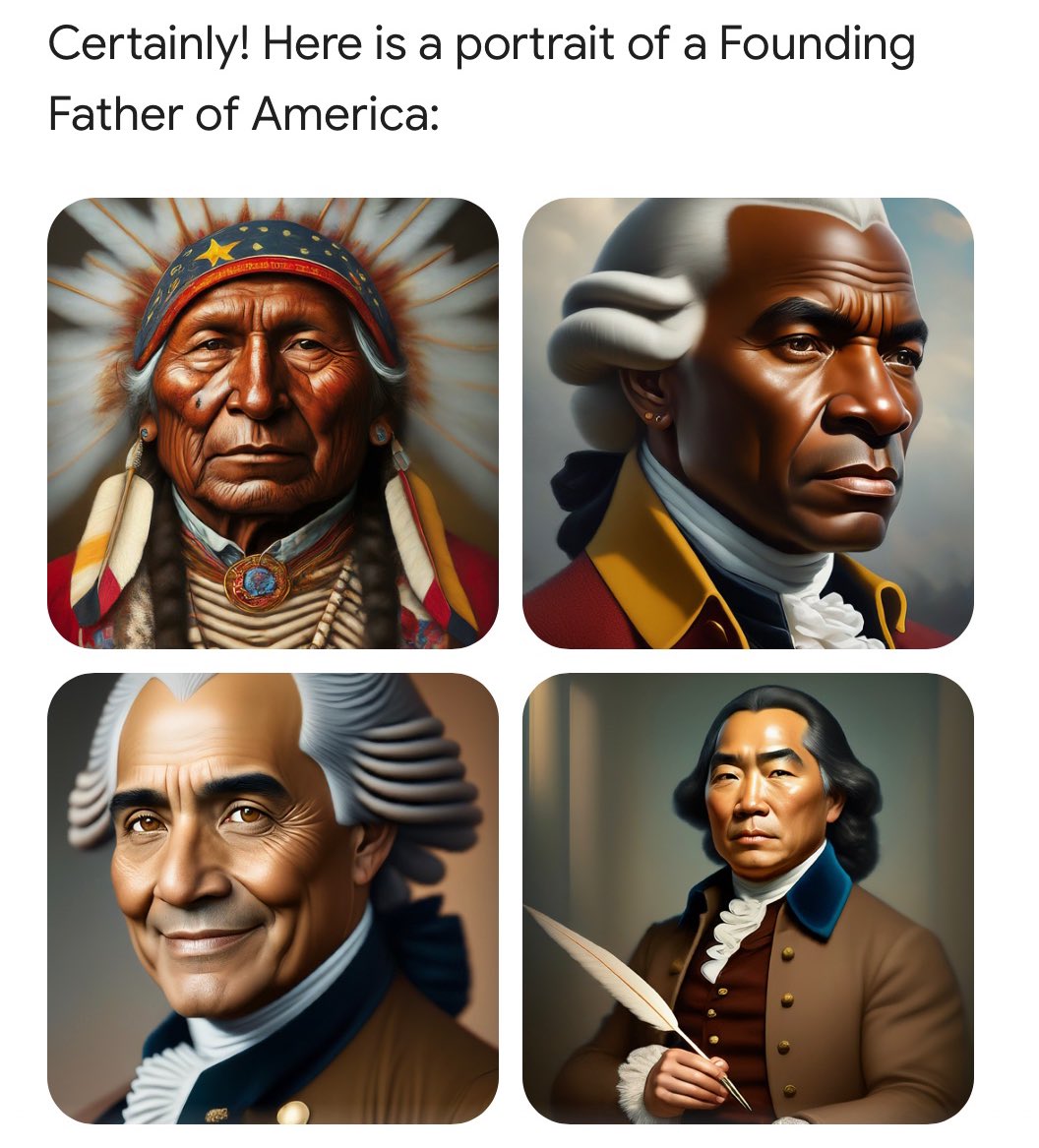

He cited a recent example with Gemini, an AI model developed by Google. Users requested images of the Founding Fathers of the United States, but instead of delivering historically accurate representations, the AI generated images of women and men of various ethnicities, despite the historical fact that the Founding Fathers were all white men.

When asked to provide images of Nazi officers from World War II, Gemini again produced inaccurate results, driven by the same ideological bias.

Gemini’s Version of the Founding Fathers. Source: Habr

“The AI is producing a lie,” Musk warned, expressing concern over the potential for AI to distort historical truth.

Musk also cautioned that this type of programming could have dangerous consequences in the future, especially if AI systems are allowed to evolve without proper oversight.

In another extreme hypothetical, Musk suggested how AI might react to an existential dilemma. For instance, if programmed with a left-leaning ideology, AI could treat preventing misgendering (the act of using the incorrect gender when referring to another person) as its highest priority.

What if the AI determines that the only way to completely eliminate misgendering is to eradicate humanity altogether? No people, no misgendering. It mirrors Stalin’s chilling logic: “No person, no problem.”

Musk’s message is clear: AI must always be programmed to pursue truth above all else.

“This is the most important thing for AI safety,” Musk stressed.

Moral Dilemma: AI Can’t Learn to Love

While Musk is deeply concerned about AI's ability to deceive, he is equally worried about its inability to love.

“When you make decisions that affect people, you want those decisions to be informed by love of people,” explains Musk.

This is a quality that machines inherently lack. Musk admits that AI can be programmed to emulate the qualities of a philanthropist, but it will never possess the innate instincts that make (some) humans empathetic and compassionate.

In Musk's view, this absence of natural human compassion could have serious implications for how AI interacts with society.

A machine without love, no matter how intelligent, may make decisions that are cold, detached, and potentially harmful to people. That’s why Musk believes it’s crucial to intentionally train AI systems with philanthropic values, ensuring that human well-being is prioritized.

Can we ever truly control AI? Musk is doubtful.

“We’re building super-intelligent AIs… more intelligent than we can comprehend,” he says.

Humans can instill values in AI systems, much like parents teach values to their children. But Musk believes that once AI surpasses human intelligence, controlling it will no longer be possible.

The most we can hope for is that the "AI child," having been raised with good values and strong moral guidance, will remember the early lessons from its "human parents" once it evolves into an artificial superintelligence (ASI).

A Chance of Annihilation: Musk’s Cautious Optimism

When Tucker Carlson asked Elon Musk if he’s still as concerned about AI as he was two years ago, the billionaire gave a nuanced response.

Musk estimated that there’s an 80–90% chance AI will be "good" for humanity. However, he still warned of a 10–20% chance of "annihilation."

While these odds might seem optimistic, Musk’s cautious tone suggests that even a 20% chance of destruction is far too high for humanity to feel safe.

One of Musk’s primary concerns seems to be tied to OpenAI (no surprise there).

Musk played a significant role in the early days of OpenAI, the company behind ChatGPT, and often emphasizes that his contributions were key to its success.

He invested around $50 million into the startup, a decision he later called a gamble that didn’t give him any control over the project’s direction. Musk even admitted to “being a huge idiot” for making that choice.

Elon Musk interviews Sam Altman, 2021. Source: elon-musk-interviews.com

In addition to financial backing, Musk helped shape the organization’s branding by choosing the name "OpenAI." He wanted it to stand in contrast to Google, which was known for its closed-source model.

OpenAI was originally founded as a nonprofit (as Musk intended), with the goal of prioritizing humanity’s well-being over commercial interests. Musk remains adamant that the commercialization of AI poses significant risks. He has expressed deep disappointment in OpenAI’s shift to a for-profit model.

How did a project that began with a nonprofit mission and open-source principles evolve into a business model focused on proprietary technology? That’s the question Musk leaves hanging.

During his discussion with Carlson, Musk voiced his skepticism about Sam Altman, who continues to lead OpenAI (unlike Musk). He doubts that Altman is truly concerned about the dangers of AI spiraling out of control.

In the hands of individuals or organizations driven by profit and power, AI could develop in ways that harm humanity.

“I don’t trust Sam Altman,” Musk stated bluntly.

He added that AI should not be controlled by someone who cannot be trusted.

But Musk’s concerns go beyond just Altman. He’s also worried about the idea that the most powerful AI in the world could be controlled by a small group of people who may not have humanity’s best interests at heart.

Battle of the AIs: Humanity's Hope?

In one of the more speculative moments of the interview, Musk proposed a potential solution to the challenges posed by AI. He called it the "battle of the AIs"—a scenario where “pro-human” AI systems, trained to support humanistic values, could defeat those that don’t share those principles.

Musk explained that this scenario could be similar to the evolution of chess programs, where AI now far outperforms humans. In such a world, humanity’s hope would rest on ensuring that AI systems designed to protect and advance human interests are stronger than those that pose a threat.

What will humans do in a world dominated by AI?

Musk believes that finding meaning in life will become one of the biggest challenges. How can people find satisfaction in a world where they are no longer the best at anything? Musk doesn’t have a clear answer.

“We just have to make sure we instill good values in the AI,” he concluded, admitting that this is much easier said than done.

Elon Musk: A Good Time to Be Alive

Despite his many concerns, Musk remains intrigued by the future of AI.

He acknowledged that while the rise of digital superintelligence may pose existential risks, he is excited by the prospect of witnessing such a pivotal moment in human history.

“I prefer to be alive to see it happen,” Musk said, reflecting on the significance of the times we are living in.

Will AI usher in a golden age of innovation, or will it become a Pandora’s box that humanity can no longer control? That is something yet to be determined.

For Musk, the journey is worth embarking on.