SynthID: Google Introduces Watermarks for AI-Generated Images

SynthID is set to tackle the escalating challenge of AI-driven misinformation and fabrications. This tool, a collaboration between Google DeepMind and Google Research, initiated its beta testing in late August 2023.

What is SynthID?

SynthID serves two main purposes: embedding watermarks in images produced by artificial intelligence and identifying images that carry them. For now, it is available to a limited set of Vertex AI customers using Imagen, Google's model that turns text into images. Vertex AI is Google Cloud's platform for building and deploying artificial intelligence in enterprises.

Users of this system can embed a digital watermark when generating images with AI. This marking is currently optional. The watermark is embedded directly in the pixels of the image and is imperceptible to the human eye. It remains detectable even after image filters, color changes, and saving with lossy compression such as JPEG.

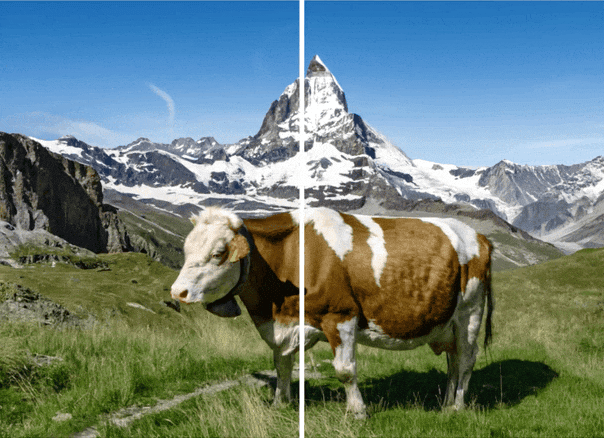

The image on the left is watermarked; the one on the right is non-watermarked. The difference is indiscernible. Source: Google DeepMind
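To make the idea of a pixel-level watermark concrete, here is a minimal sketch of a classic spread-spectrum watermark. This is not SynthID's method, which relies on trained neural networks and has not been published in detail. The function names (`make_pattern`, `embed`, `score`), the key strings, and the `strength` value are all hypothetical and chosen for illustration only. The sketch shows how a key-derived pattern can be hidden in small pixel changes and later detected by correlation.

```python
import random

def make_pattern(key, n):
    """Derive a reproducible pseudo-random pattern of +1/-1 values from a secret key."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels, key, strength=4):
    """Nudge each 0-255 pixel value up or down by `strength`, following the key's pattern."""
    pattern = make_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]

def score(pixels, key):
    """Correlate mean-centred pixels with the key's pattern.

    The result is close to `strength` if the pattern is present and close to 0 otherwise.
    """
    pattern = make_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)

# Stand-in for a 200x200 grayscale image: mid-range random pixel values (toy data).
rng = random.Random(0)
image = [rng.randint(64, 192) for _ in range(200 * 200)]

marked = embed(image, key="demo-key")
brighter = [p + 10 for p in marked]  # a uniform brightness shift applied after marking

print(round(score(image, "demo-key"), 2))     # near 0: no watermark
print(round(score(marked, "demo-key"), 2))    # near 4: watermark present
print(round(score(brighter, "demo-key"), 2))  # about the same: survives the shift
print(round(score(marked, "other-key"), 2))   # near 0: wrong key
```

A change of at most 4 out of 255 per pixel is hard to see, yet the correlation across tens of thousands of pixels stands out clearly. This toy version only tolerates simple edits such as a brightness shift; surviving cropping, filters, or JPEG compression takes considerably more sophisticated techniques.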

In the identification process of AI-generated images, SynthID offers three possible outcomes:

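- Watermark not detected (the image was probably not generated with Imagen, though this alone does not prove that a human created it);

- Watermark detected (the image, or part of it, was likely generated with Imagen);

- Watermark possibly detected (the image may have been generated with Imagen and should be treated with caution).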
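A three-way result like this is commonly produced by comparing a detector's confidence score against two thresholds. The sketch below illustrates that pattern only. The `classify` function, the `Verdict` names, and the threshold values 0.3 and 0.7 are assumptions made for illustration; Google has not published how SynthID computes or thresholds its scores.

```python
from enum import Enum

class Verdict(Enum):
    NOT_DETECTED = "watermark not detected"
    POSSIBLY_DETECTED = "watermark possibly detected"
    DETECTED = "watermark detected"

def classify(confidence, low=0.3, high=0.7):
    """Map a detector confidence in [0, 1] to one of three outcomes.

    `low` and `high` are hypothetical thresholds, not values used by SynthID.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= high:
        return Verdict.DETECTED
    if confidence <= low:
        return Verdict.NOT_DETECTED
    # Anything between the two thresholds is reported as uncertain.
    return Verdict.POSSIBLY_DETECTED

for value in (0.1, 0.5, 0.9):
    print(value, classify(value).value)
```

Keeping a middle band avoids forcing a yes-or-no answer on borderline images, at the cost of leaving some results for a human to review.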

The watermark remains intact even after substantial modifications to the AI-produced image. Source: Google DeepMind

Currently, SynthID's application scope is somewhat narrow, making it too early to label it a universal solution against misinformation. However, it signifies an encouraging move in the ongoing efforts to ensure digital content authenticity.

In its roadmap, Google plans to incorporate SynthID into a wider variety of products. The tech giant foresees that soon both individuals and entities will have advanced tools at their disposal for responsibly engaging with AI-generated content.

Such advancements might have profound effects in various sectors. In journalism, where the credibility and accuracy of visuals are crucial, SynthID could help establish whether images used in news reports were generated by AI. Similarly, in advertising, where doctored images can mislead consumers about a product's or service's attributes, the tool could help expose deceptive visuals.

Watermarks for AI Content: History and Modern Challenges

For years, watermarks have been a tool for safeguarding digital content's intellectual property rights. The term "digital watermark" emerged in 1992, with the concept coming to fruition by the following year.

The fundamental idea of watermarking traces back to the 13th century. At that time, papermakers in Italy started embedding unique marks in their papers to identify their work. This method was subsequently adopted by artists and publishers to shield their creations from unauthorized duplication. As we transitioned into the digital era, the application of watermarks evolved, now incorporating techniques such as visible watermarks, invisible ones, and digital signatures.

Watermarked paper, Italy, 16th century. Source: Harvard Art Museums

One of the primary challenges with watermarks on AI-generated content is that AI algorithms can be trained to detect and remove these marks. This poses significant difficulties in ensuring intellectual property protection and content authenticity. This situation is particularly frustrating for content creators and rights holders who view watermarks as a primary defense mechanism.

The blending of genuine visual and textual information with AI-generated content has reached an almost absurd point. For instance, a recent study found that users struggle to tell tweets written by humans from those produced by AI. Strikingly, readers often rated the AI-generated tweets as more persuasive and trustworthy.

Consider the brief stock market dip in May 2023, triggered by the rapid spread on social media of a fabricated image of an explosion near the Pentagon. Although some details of the image, such as objects blending into one another, suggested it was doctored, it was never confirmed that the image was produced by AI.

Then there was the political advert from the summer of 2023, released in support of the presidential campaign of Florida Governor Ron DeSantis. The makers of the video seemingly used AI technology to emulate Donald Trump's voice, producing statements that the former US president had never spoken aloud. In another ad for DeSantis, Trump was depicted embracing Dr. Anthony Fauci, the former chief medical advisor to the US president and a key proponent of COVID-19 measures. These images, too, were likely the work of AI.

DeSantis' campaign video included 3 fake images. Source: DeSantis War Room, X

No one can say with absolute certainty if these, among other deceptive pieces, were the handiwork of AI. However, one thing is clear: as AI algorithms advance, distinguishing between reality and fabrication is becoming increasingly difficult.

Watermarks: A Market Response

The alarming increase in AI-generated fabrications has compelled major corporations—including Google, Microsoft, Meta, Amazon, OpenAI, Anthropic, and Inflection—to pledge to watermark content produced by AI. This voluntary initiative aims not only to rebuild public trust in media and information from social networks but also to combat the spread of AI-driven misinformation.

In line with this commitment, tech giants have begun to design watermarks that identify the specific AI model behind a piece of content without revealing the identity of the end user. The beta testing of SynthID, in particular, is Google's way of fulfilling the agreement reached among key AI stakeholders. The next steps are critical: scaling this solution, waiting for comparable tools from the other pledge participants, and ultimately enforcing the marking of AI content through legislation.

GN has previously highlighted the potential challenges that this new era of AI-content marking will undoubtedly encounter.